Beyond LLMs: Unlocking AI's Next Frontier

What will happen once AI gets access to real-world data

We’ve all seen how quickly large language models (LLMs) like GPT have improved. They’re getting better at understanding and generating text, and it’s easy to think that scaling up LLMs is the only way forward. But the truth is, LLMs are just scratching the surface of what’s possible with AI.

The next big opportunity lies in going beyond LLMs and tapping into the vast amount of real-world data that these models currently leave untouched. I presented this idea a few weeks ago at Project A’s PakCon 2024. Here’s a summary:

The Limitation of Text-Based Models

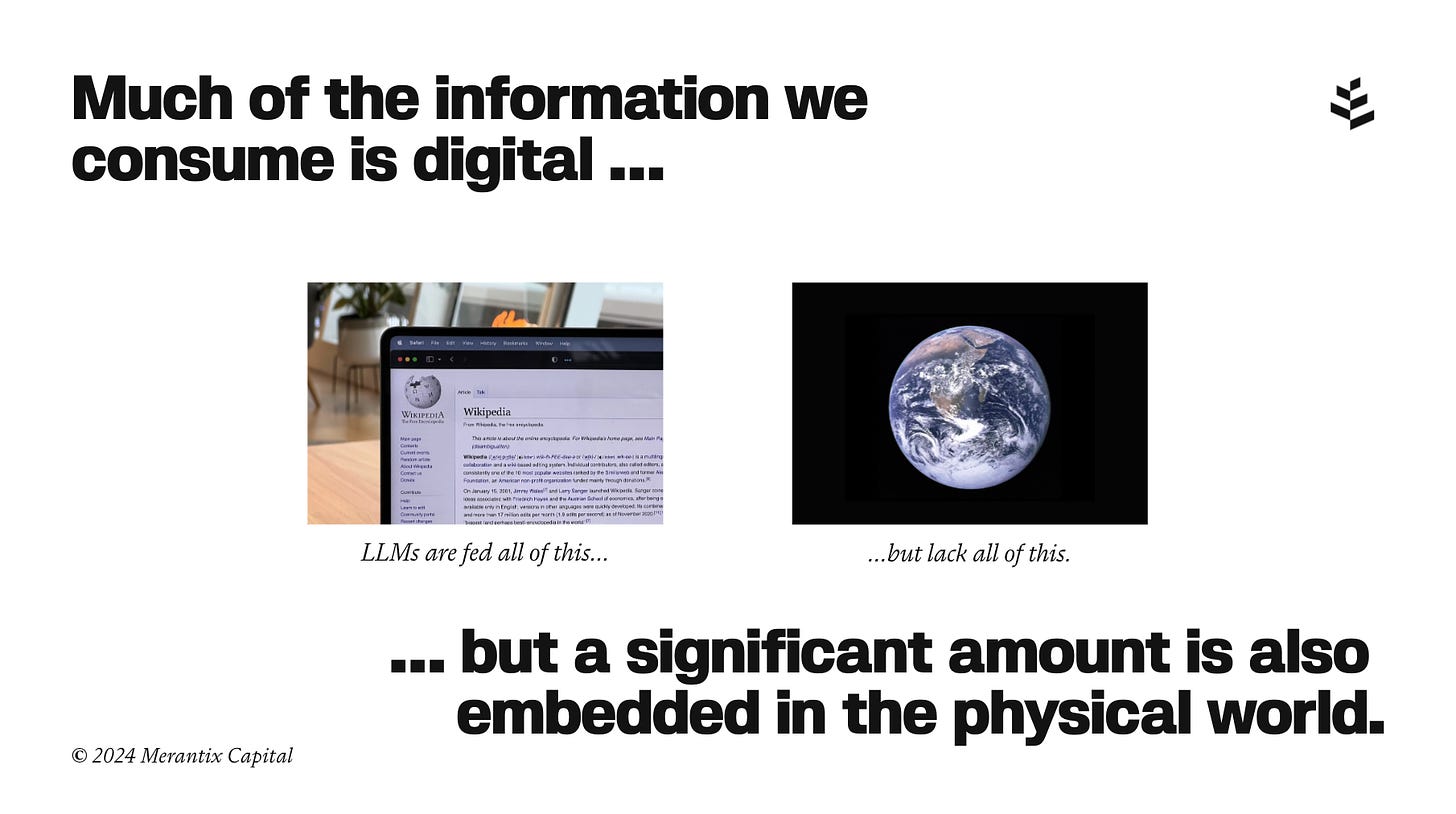

Today’s LLMs are impressive, but they all share one major limitation: they’re trained almost entirely on data from the web. Think about it: all the text, articles, and websites that form the backbone of training datasets are just a small fraction of the data that actually exists in the world. Much of the most meaningful data is found in the physical environment around us, and LLMs haven’t even begun to touch it.

Yes, datasets are getting bigger, chips are improving, and we’re throwing more compute power at the problem. But eventually, we’re going to hit a ceiling. There’s only so much original text content to mine from the web. If we really want to take AI to the next level, we need to go beyond what’s written and start processing the vast amount of non-verbal, real-world data that exists all around us.

Humans Don’t Just Use Text

Here’s a key insight: humans don’t rely on text alone to make sense of the world. By some estimates, around 90% of our sensory input is non-verbal: we see, feel, smell, and interact with our physical surroundings. AI needs to evolve in the same direction. If we want AI systems that can operate effectively in real-world environments, they need to process the same kinds of multimodal data that we do.

Think about autonomous cars. These vehicles generate massive amounts of data as they navigate the world, from sensor readings to camera footage, and all of it goes beyond text. AI models need to be able to process and learn from these kinds of data sources, which is where new technical tools like multimodality, zero-shot learning, and edge computing come into play.

Multimodality and Zero-Shot Learning: The Future of AI

The future of AI lies in multimodality—the ability to handle different types of data beyond just text, such as images, audio, video, and sensory data from the physical world. By building models that can understand and learn from this wide range of data, we’re opening the door to far more powerful AI systems.

Then there’s zero-shot learning, a technique in which a model handles classes or tasks it was never explicitly trained on, typically by relating them to what it has already learned (for example, through a textual or attribute-based description of the new category). This allows AI to generalize more effectively and handle situations that weren’t part of its training data. Imagine an AI system that can recognize new objects or events in real time, without any labeled examples of them.
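One common way to do this is attribute-based zero-shot classification. The minimal sketch below assumes a model that already detects a shared set of attributes (here a hypothetical vector of `moving`, `hot`, `vibrating`, and `metallic` scores) plus a few class descriptions written by a domain expert. A class is recognized by comparing the predicted attributes to its description with cosine similarity, without any training examples of that class. All attribute names, class labels, and numbers are hypothetical.

```python
import math

# Hypothetical attribute vocabulary shared by every class, seen or unseen.
ATTRIBUTES = ["moving", "hot", "vibrating", "metallic"]

# Hypothetical class descriptions written by a domain expert (one score per attribute).
# No labeled training examples of these classes are needed.
CLASS_DESCRIPTIONS = {
    "conveyor_jam": [0.0, 0.2, 0.9, 1.0],
    "overheating_motor": [1.0, 1.0, 0.6, 1.0],
    "idle_station": [0.0, 0.0, 0.0, 1.0],
}


def cosine(a, b):
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def zero_shot_classify(predicted_attributes, descriptions):
    """Pick the class whose description best matches the predicted attributes.

    `predicted_attributes` is assumed to come from an attribute detector that
    was trained only to recognize attributes, never these specific classes.
    """
    scores = {
        label: cosine(predicted_attributes, desc)
        for label, desc in descriptions.items()
    }
    best = max(scores, key=scores.get)
    return best, scores


if __name__ == "__main__":
    label, scores = zero_shot_classify([0.9, 0.95, 0.5, 1.0], CLASS_DESCRIPTIONS)
    print(label, {k: round(v, 3) for k, v in scores.items()})
```

Adding a new category then means writing one more description vector rather than collecting and labeling a new dataset; real systems typically replace the hand-written vectors with learned text or image embeddings.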

And don’t forget about edge computing. By running AI on hardware deployed directly in real-world environments, we bring models closer to the data they need to process. This reduces latency and the bandwidth needed to ship raw data to the cloud, and it enables real-time decision-making in areas like manufacturing, robotics, and healthcare.
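A minimal sketch of the edge pattern, assuming a single numeric sensor stream: the device keeps a short rolling window in memory, scores each reading locally with a simple z-score test, and forwards only unusual readings upstream. The class name `EdgeAnomalyFilter`, the window size, and the 3-sigma threshold are hypothetical choices for illustration; a real deployment would more likely run a trained model on the device than a z-score rule.

```python
import statistics
from collections import deque


class EdgeAnomalyFilter:
    """Hypothetical on-device filter: keeps recent readings in a bounded window."""

    def __init__(self, window=20, threshold=3.0):
        self.readings = deque(maxlen=window)  # old readings drop off automatically
        self.threshold = threshold            # z-score above which a reading is flagged

    def process(self, value):
        """Return True if this reading should be sent upstream."""
        is_anomaly = False
        if len(self.readings) >= 2:  # stdev needs at least two data points
            mean = statistics.fmean(self.readings)
            stdev = statistics.stdev(self.readings)
            if stdev > 0 and abs(value - mean) / stdev > self.threshold:
                is_anomaly = True
        self.readings.append(value)
        return is_anomaly


def run_on_device(stream, edge_filter):
    """Process the whole stream locally; only flagged (index, value) pairs leave the device."""
    return [(i, v) for i, v in enumerate(stream) if edge_filter.process(v)]


if __name__ == "__main__":
    temperatures = [20.0, 20.1, 19.9, 20.0, 20.2, 19.8, 20.1, 35.0, 20.0]
    print(run_on_device(temperatures, EdgeAnomalyFilter()))
```

With a temperature stream that hovers around 20 °C and contains a single 35 °C spike, only the spike is forwarded, so the raw stream never has to leave the device.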

Real-World AI: The Untapped Opportunity

Here’s where the real opportunity lies: while LLMs are great at text-based tasks, the vast majority of data that could fuel the next wave of AI innovation is out there in the physical world. Yann LeCun famously pointed out that a four-year-old child has taken in about 50 times more data, mostly through vision, than the largest LLMs are trained on. That’s because humans learn by interacting with the real world, and AI needs to do the same.
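LeCun’s comparison is a back-of-the-envelope estimate. The calculation below reconstructs it with round figures that should be read as illustrative assumptions: about 16,000 waking hours by age four, about $2\times10^{7}$ bytes per second arriving through the optic nerves, and about $2\times10^{13}$ bytes of text in a large LLM’s training set.

$$
\begin{aligned}
D_{\text{child}} &\approx 1.6\times10^{4}\,\text{h} \times 3600\,\tfrac{\text{s}}{\text{h}} \times 2\times10^{7}\,\tfrac{\text{B}}{\text{s}} \approx 1.2\times10^{15}\,\text{B} \sim 10^{15}\,\text{B} \\
D_{\text{LLM}} &\approx 2\times10^{13}\,\text{B} \\
\frac{D_{\text{child}}}{D_{\text{LLM}}} &\approx \frac{10^{15}}{2\times10^{13}} = 50
\end{aligned}
$$

The exact multiplier shifts with each assumption; what matters is the order of magnitude gap between sensory data and web text.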

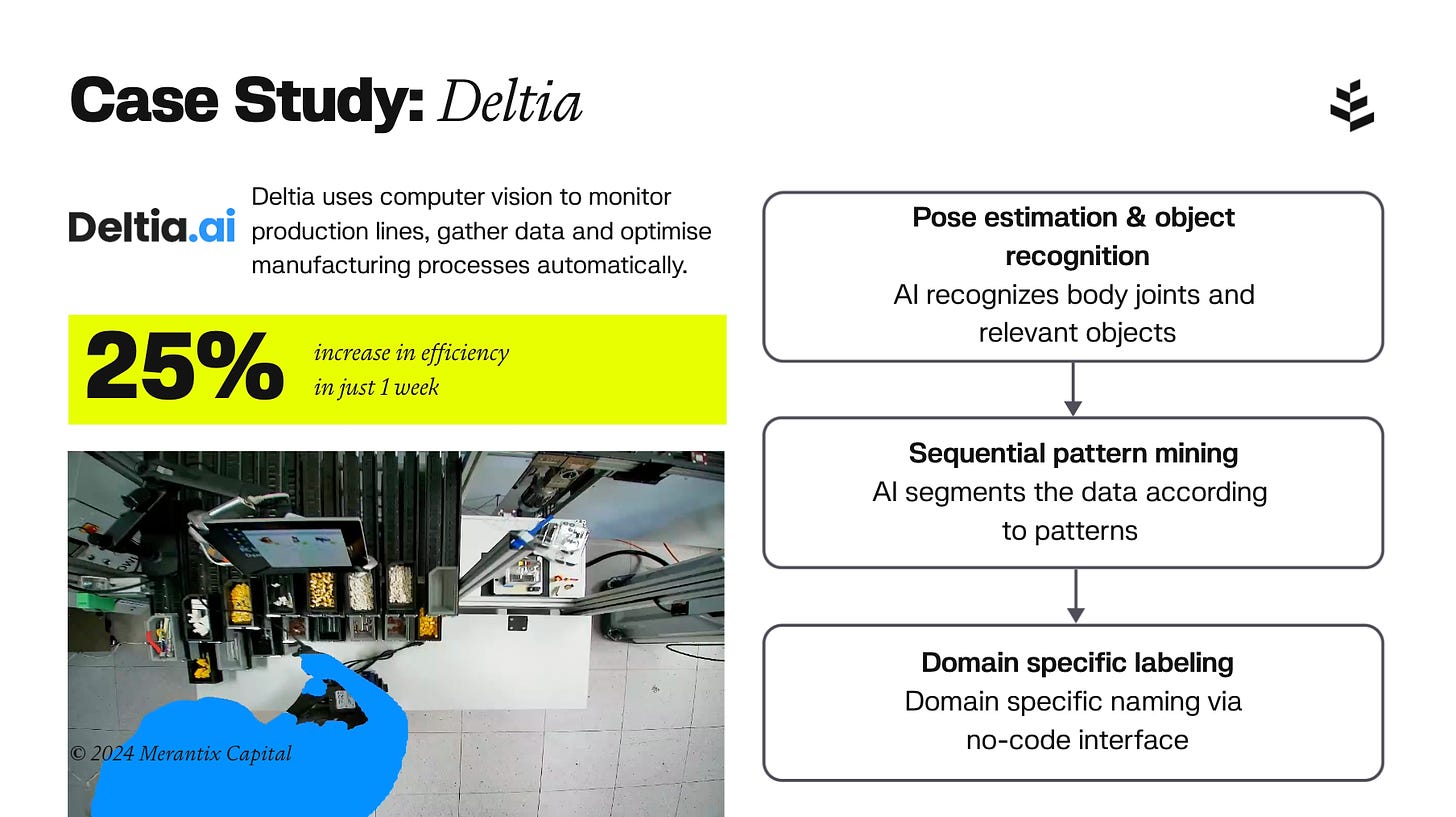

In sectors like manufacturing, healthcare, energy, and logistics, there’s an enormous amount of untapped data waiting to be processed by AI systems. For instance, one of our companies, Deltia, uses computer vision in factories to analyze workflows, making them more efficient. This data can eventually be used to train robots, further automating tasks and pushing AI into new areas.

And it’s not just about efficiency. There’s a wealth of insights, improvements, and innovations that can come from harnessing this data, and right now, most of it is going unprocessed. Every industry stands to benefit from AI that can understand and interact with the real world, and this is where Europe, in particular, has a unique opportunity.

Europe’s Unique Opportunity

Unlike the U.S. and China, which have largely focused on tech-centric AI development, Europe has a rich industrial heritage that offers a distinct advantage. Many of the industries where AI can have the most immediate impact, such as manufacturing and logistics, are areas where Europe excels. While the race for LLMs may be led elsewhere, Europe has the potential to lead the next phase of AI development by bringing its domain expertise to real-world AI applications.

The key is to act now. As these industries adopt AI, Europe has a window of opportunity to build on its expertise in these fields and scale it for global impact.

Unlocking AI’s Next Frontier

The future of AI isn’t just about making LLMs better. It’s about accessing and understanding the data that exists all around us in the physical world. By focusing on multimodal AI, zero-shot learning, and edge computing, we can unlock entirely new possibilities for innovation and business growth.

Whether you’re in manufacturing, healthcare, logistics, or any other sector with real-world data, the next big wave of AI is coming your way. All you need to do is prepare to tap into it.